Employees are adopting AI faster than the organisations they work for. Generative AI is spreading through personal accounts and informal workflows rather than IT-approved tools and deployments. Nearly three-quarters of workplace ChatGPT usage comes from personal email accounts. This unapproved use of AI is known as shadow AI.

Only 28% of organisations have a fully developed AI change management plan; many are still drafting or building theirs[1]. This widening gap between rapid employee adoption and organisational readiness creates serious risks for businesses.

What is Shadow AI?

Shadow AI refers to employees' use of AI tools or applications that the organisation has not approved. This approval is usually granted by the Information Technology (IT) department. Training does not necessarily prevent it: although 40% of employees recall receiving AI training, 40% still use unapproved tools daily[2].

A common example of unauthorised generative AI use is ChatGPT, which can handle tasks such as text editing or data analysis in seconds. Employees often use these tools to improve productivity and speed up their work. The most frequent uses are content creation (45%), data analysis (32%), translation (28%) and image generation (18%)[3].

But when the IT team is unaware of these activities, they can unintentionally expose the organisation to risks, weakening data security, regulatory compliance and reputation.

Causes of Shadow AI

The easy availability of AI tools encourages employees to turn to unapproved solutions for speed, efficiency and problem-solving. Common reasons for this behaviour include:

Boosting Everyday Productivity

Employees use generative AI to automate repetitive work, generate content quickly and remove delays from their workflows. They mainly use the tools to:

- Draft emails

- Create reports and presentations within seconds

- Generate code snippets

- Summarise long documents

These shortcuts are particularly helpful when tight deadlines push employees to find quicker options.

Encouraging Experimentation

Shadow AI can give your team the space to test ideas without waiting for formal approval. This flexibility supports creative thinking and experimentation aimed at performance improvement.

Quick Problem Solving

Employees can address issues immediately by using accessible AI tools. This quick response can improve customer service and operational efficiency.

Choosing Convenience Over Procedure

Employees may bypass official policy because AI tools feel faster, easier and more accessible than sanctioned alternatives. This behaviour is usually not malicious.

Common Areas of Shadow AI Usage

Although unapproved AI tools may seem helpful, they pose operational and security risks. The most commonly used types include:

AI-powered Chatbots

Employees use unapproved chatbots to answer customer queries quickly, which creates inconsistent messaging and risks exposing sensitive information.

Machine Learning (ML) Models for Data Analysis

Analysts use external ML tools to examine company data. They gain insights but may unintentionally expose confidential or proprietary information.

Marketing Automation Tools

Marketing teams adopt unsanctioned tools to automate campaigns or analyse engagement. This can improve performance, but it risks non-compliance when the tools process customers' sensitive data.

Data Visualisation Tools

Staff can create charts and dashboards with the help of AI visualisation platforms. Uploading internal data without IT approval can lead to inaccurate reporting and security vulnerabilities.

Risks and Impact of Shadow AI

When employees use AI tools without organisational approval, they can compromise security, compliance and reputation. The main risks include:

Data Exposure

Employees may paste sensitive legal, financial or strategic information into public AI tools. Many public large language model (LLM) services retain user inputs, and some may use them to train future models, which can lead to confidentiality breaches or intellectual property loss. Monitoring outbound traffic and using data loss prevention (DLP) tools can help reduce this risk.
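As a rough illustration of the data loss prevention idea, the sketch below checks text for patterns that resemble sensitive data before an employee sends it to a public AI tool. The pattern list and the `screen_prompt` function are hypothetical examples, not the behaviour of any particular DLP product, which would typically rely on much richer detection.

```python
import re

# Hypothetical patterns for illustration only; real DLP tools use much
# richer detection (classifiers, document fingerprints, context rules).
SENSITIVE_PATTERNS = {
    "email address": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "payment card number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    "confidentiality marker": re.compile(
        r"\b(confidential|internal only)\b", re.IGNORECASE
    ),
}


def screen_prompt(text: str) -> list[str]:
    """Return the labels of any sensitive patterns found in the text."""
    return [
        label
        for label, pattern in SENSITIVE_PATTERNS.items()
        if pattern.search(text)
    ]


if __name__ == "__main__":
    prompt = "Summarise this CONFIDENTIAL board memo for jane.doe@example.com"
    findings = screen_prompt(prompt)
    if findings:
        print("Blocked: prompt appears to contain", ", ".join(findings))
    else:
        print("OK to send")
```

Simple regular expressions like these will miss many cases and flag some harmless text, so a check of this kind is a starting point for discussion with IT rather than a safeguard in itself.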

Regulatory Non-Compliance

Processing regulated data, such as patient health records, with unapproved AI tools can violate laws like HIPAA. Organisations need to track where AI tools handle data so that all usage complies with industry regulations and avoids legal penalties.
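One possible way to start tracking where AI tools handle company data is to review web proxy logs for traffic to known AI services. The sketch below assumes a simplified log with one "user domain" pair per line; the domain lists and the `find_shadow_ai` function are illustrative assumptions, and `ai.example-corp.internal` stands in for a hypothetical approved in-house deployment.

```python
from collections import Counter

# Example AI service domains; a real list would be maintained by the
# security team. "ai.example-corp.internal" is a hypothetical approved tool.
KNOWN_AI_DOMAINS = {
    "chatgpt.com",
    "chat.openai.com",
    "gemini.google.com",
    "claude.ai",
    "ai.example-corp.internal",
}
APPROVED_AI_DOMAINS = {"ai.example-corp.internal"}


def find_shadow_ai(log_lines):
    """Count requests per (user, domain) to AI services that are not approved.

    Assumes a simplified log format of one "user domain" pair per line.
    """
    usage = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) != 2:
            continue  # skip lines that do not match the assumed format
        user, domain = parts
        domain = domain.lower()
        if domain in KNOWN_AI_DOMAINS and domain not in APPROVED_AI_DOMAINS:
            usage[(user, domain)] += 1
    return usage


if __name__ == "__main__":
    sample_log = [
        "alice chatgpt.com",
        "alice chatgpt.com",
        "bob ai.example-corp.internal",
        "carol claude.ai",
        "dave intranet.example-corp.internal",
    ]
    for (user, domain), count in find_shadow_ai(sample_log).most_common():
        print(f"{user} -> {domain}: {count} request(s)")
```

Real proxy logs carry many more fields, and the set of AI services changes quickly, so both domain lists would need regular review. The aim is visibility that starts a conversation with teams, not automatic blocking.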

Cybersecurity Threats

Unapproved AI applications can carry malware or spyware, exposing corporate systems to data theft or unauthorised access. IT teams should monitor unusual downloads and use endpoint security controls to prevent breaches.

Inaccurate or Ineffective Output

Relying on AI-generated content without verification can cause reputational damage or legal issues, because AI tools can produce inaccurate or fabricated information. Without human behavioural insights, AI-generated marketing content can also feel robotic and lose its relevance. Organisations should review outputs and audit them regularly to ensure quality and accuracy.

Ways to Manage the Risks of Shadow AI

Managing shadow AI is not about slowing down innovation. It is about setting guardrails that let teams work quickly without undermining the company's security or reputation. Several key steps can lower the risks of shadow AI.

Define an Effective AI Strategy

Establish clear guidelines for where and how employees can use AI, and tie them to business goals so that experimentation stays safe. A structured strategy ensures adoption is intentional rather than accidental. Account for emerging trends in AI-driven content creation, and secure employee buy-in.

Train Employees in Responsible AI Use

Provide formal training on AI tools, risks and best practices. Well-informed employees are better placed to turn experimentation into safe, business-aligned results.

Position IT as a Partner

Let your IT team lead governance, set permissions and provide approved platforms. This approach protects sensitive data while allowing innovation to happen in the open.

Consolidate Tools and Workflows

Centralise AI capabilities and reduce overlapping applications. This lowers costs and simplifies oversight.

Keep Policies Adaptive

Regularly update AI policies to reflect new features, regulatory changes and evolving vendor integrations. Policies that keep pace with these changes prevent governance from becoming reactive and ineffective.

Engage Employees in AI Decisions

Involve staff through surveys, focus groups and feedback sessions to understand their needs. Providing approved tools built around how employees actually work improves alignment and reduces unsanctioned AI use.

Prioritise AI Initiatives by Risk and Value

Start with low-risk, high-impact applications to evaluate performance and outcomes. Alongside this, review expert guidance on AI-generated content and build widely accepted practices into your policy.

Managing shadow AI demands strong controls, active monitoring and awareness. Unsanctioned AI tools can expose sensitive data, breach privacy regulations and leave company information vulnerable. These risks will grow as usage increases.

To address these challenges, organisations need to implement structured AI strategies, mandate secure tools and educate employees on responsible usage. Whether you need AI training modules for your L&D programme or editing for AI-generated content, Edisol can help your business adopt AI while minimising the risks of shadow AI. Contact us today to get started.

Frequently Asked Questions

How does shadow AI emerge within an organisation?

It arises when employees use AI tools independently, attracted by their ease of use. Staff usually adopt these tools without informing IT or seeking formal approval from the company.

What risks does shadow AI pose to organisations?

Unapproved AI tools create security gaps, regulatory risks and unreliable content, all of which can lead to unexpected costs.

Why is shadow AI gaining attention now?

As AI tools become more accessible, employees increasingly use them to solve problems and demonstrate productivity.

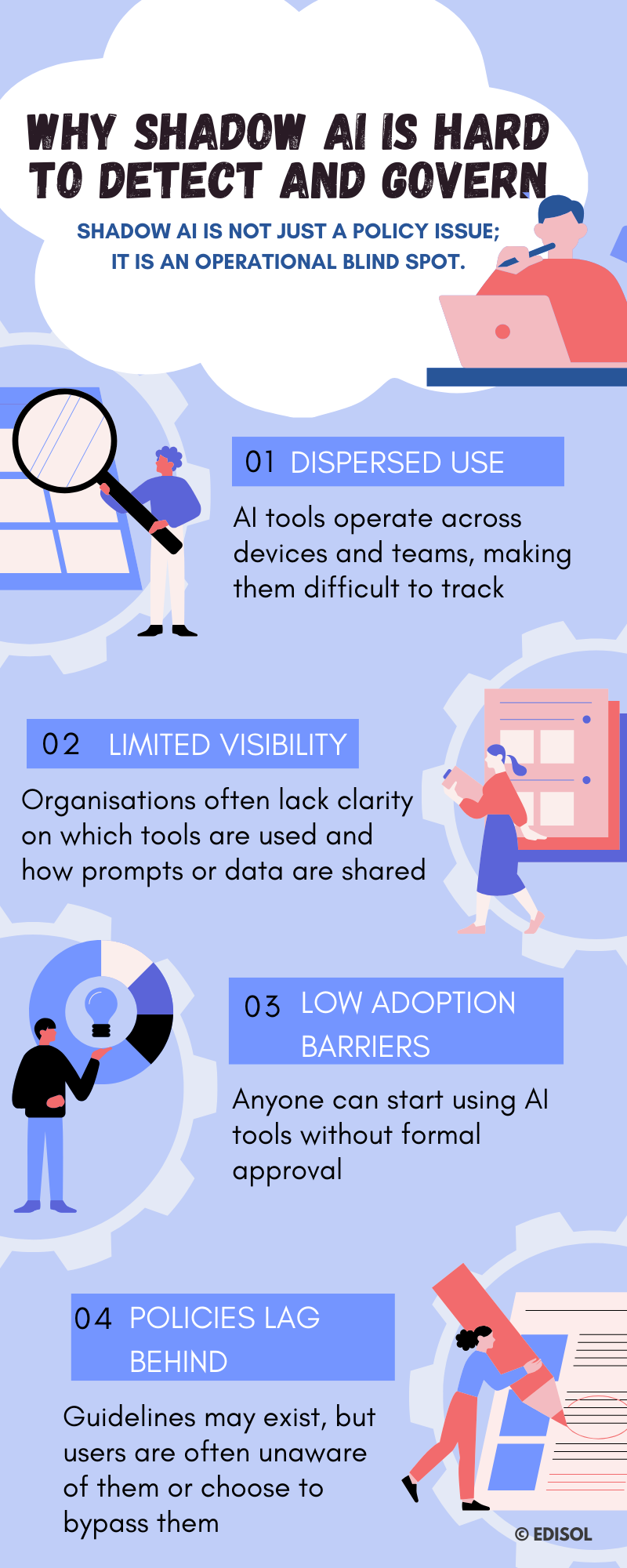

Why does shadow AI pose a challenge for organisations?

Unsanctioned AI use can cause data leaks and compliance failures, resulting in intellectual property loss, regulatory fines and reputational damage. Because it happens outside the IT department's knowledge, this usage becomes difficult to control once it spreads.

How does shadow AI differ from formally approved AI systems?

Approved AI systems go through structured, IT-led processes to ensure security. Shadow AI bypasses these steps, entering the organisation through individual users or departments.

References:

- [1] https://hrdailyadvisor.com/2025/08/21/how-organizations-can-equip-employees-for-the-ai-era/#:~:text=Additionally%2C%20according%20to%20a%202024%20Deloitte%20survey%2C,have%20comprehensive%20change%20management%20plans%20in%20place.

- [2] https://www.upguard.com/resources/the-state-of-shadow-ai

- [3] https://www.intotheminds.com/blog/en/shadow-gpt/#:~:text=Statistics%20and%20concrete%20examples%20of,biased%20decisions%20and%20regulatory%20sanctions.